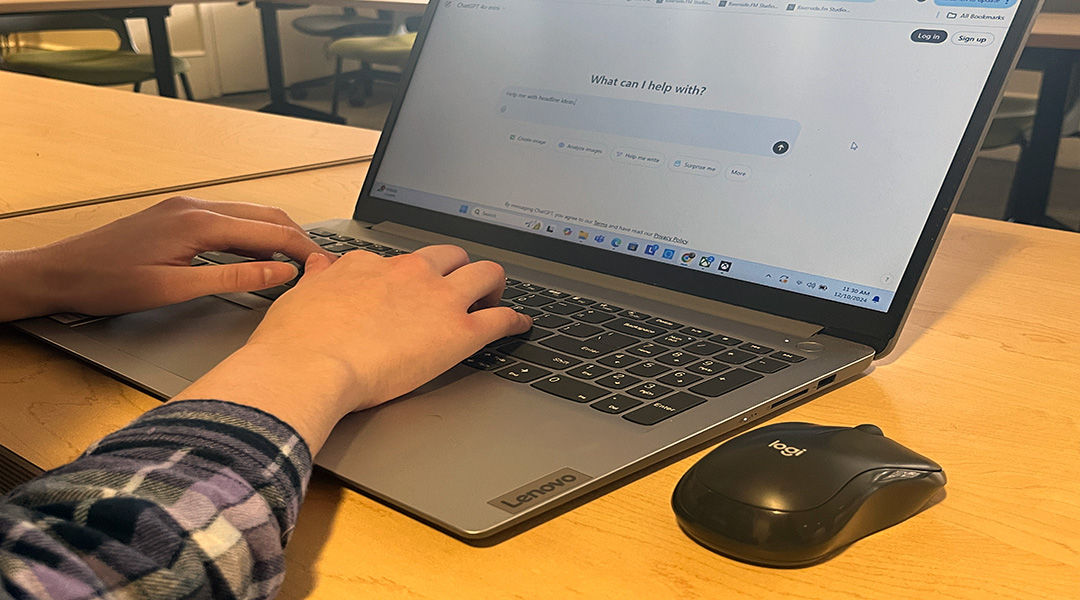

Artificial intelligence has entered the communications field, but is it ethical to use it to help create content? (Photo by Jayden Simmons/Carolina News & Reporter)

Communication is an ever-evolving field. New technology. More versatility in the workplace. The shift toward new styles of communicating.

But now, in the mid-2020s, the communications field faces an unprecedented challenge: artificial intelligence.

Tasks once performed only by humans — who rely on reasoning and creativity — can now be completed with AI programs that merely mimic human intelligence.

That sounds like it has no business in the journalism and communications field, right?

Well, to an extent.

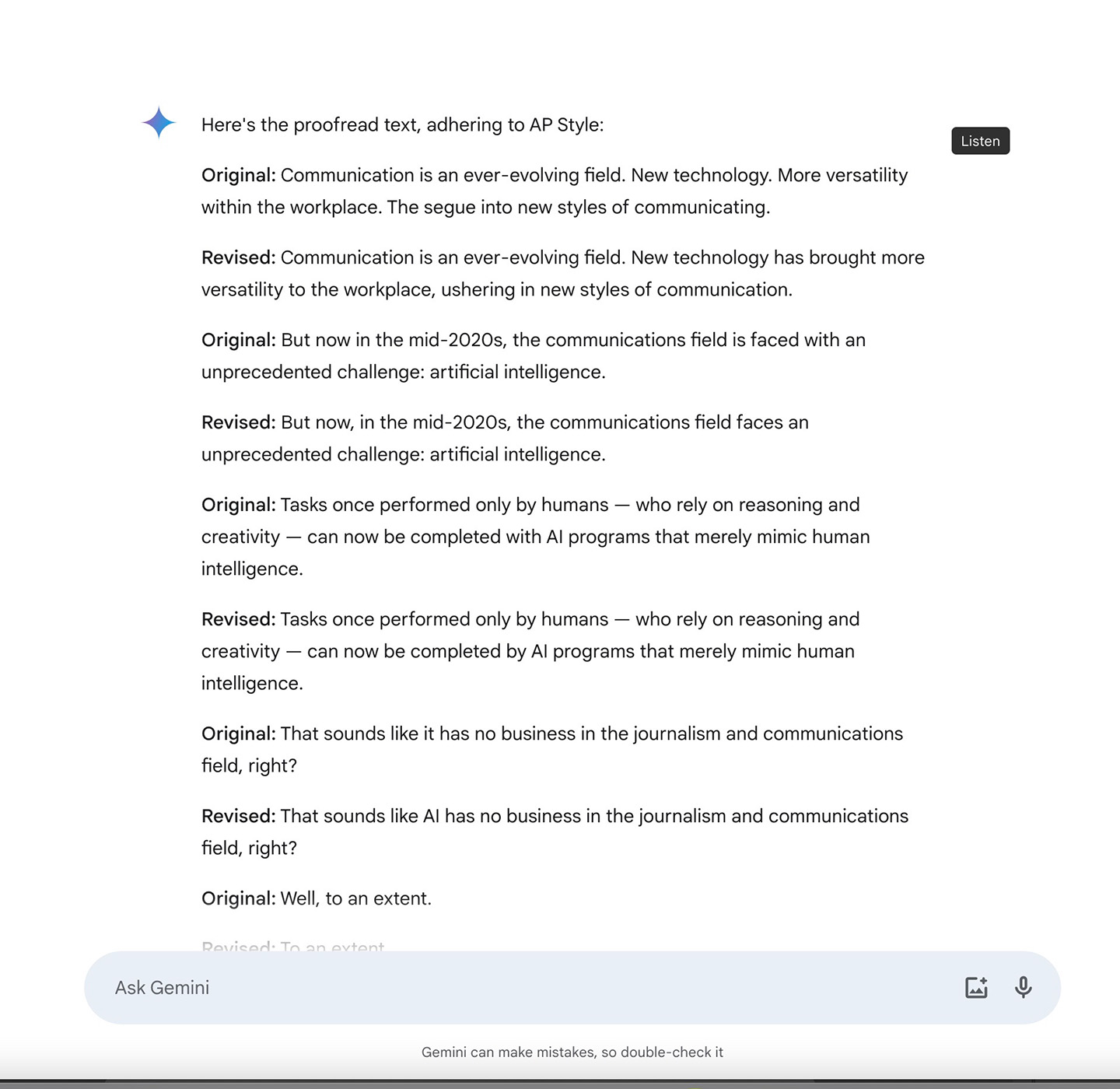

The headline of this article was created with the help of AI. The previous sentences were proofread using AI as well. But I’m not the only journalist using this tool.

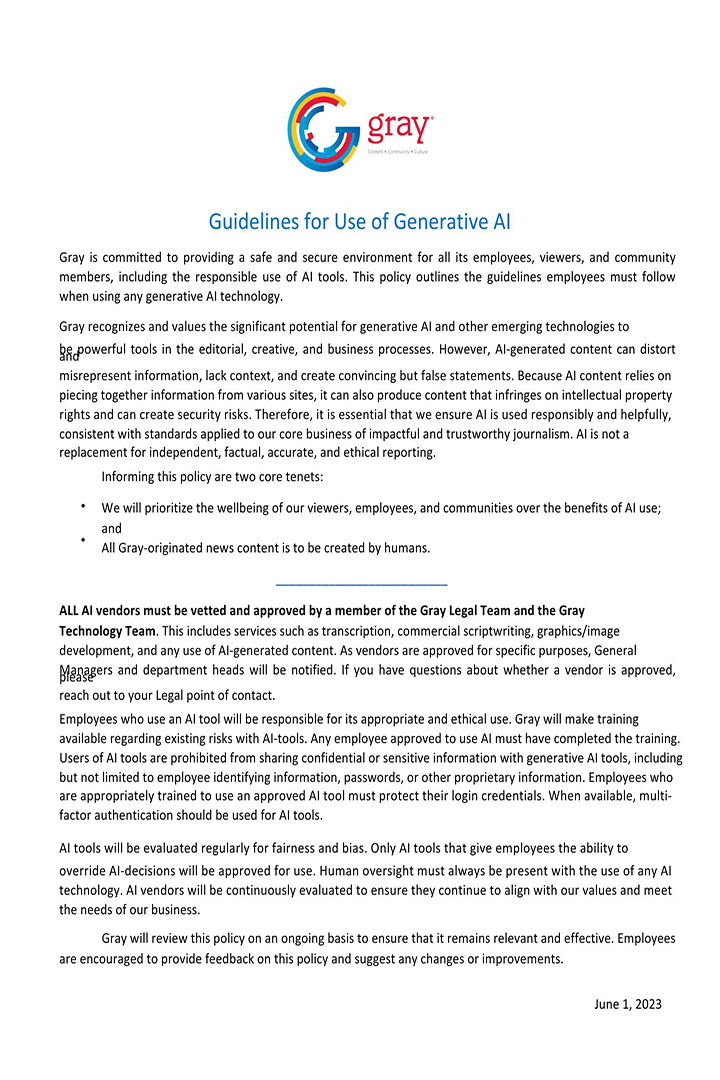

Gray Media, a nationwide media company, has released a public-facing policy on the use of artificial intelligence in its media content. Robby Thomas, vice president and general manager of WIS-TV in Columbia, served on the committee that drafted the policy.

“I think a fundamental understanding of what artificial intelligence is and how it works will be very helpful in a journalism career,” Thomas said. “I do think that the discretion of a professional journalist is something that still needs to be a part of the equation.”

Discretion is a term often linked to the use of AI, and it matters even more in journalistic work. At WIS-TV, the policy is clear: AI mustn’t do the work for the journalists.

Still, make no mistake: Gray Media and other media companies do see value in using AI selectively, including for transcribing or even reformatting stories for different mediums.

“In very specific instances, we may allow a story in another format to be re-written for social media or other platforms,” Thomas said. “But all original journalism must be created by humans and the originating journalist must review and give final approval on all versions. They have final responsibility.”

Thomas described his approach to AI as “bullish” but emphasized that the company always prioritizes the well-being of its communities over the benefits of using AI.

So, what other ways does AI fit into the communications field if it’s not being used to generate content?

‘A tool, not a format’

Jenny Dennis is the president and chief content officer of TRIO Solutions, a Charleston-based marketing agency.

At her agency, AI is not a hurdle. It’s a new frontier.

“For us, AI has been pretty transformative,” Dennis said. “It can provide good inspiration. It can really help kick-start ideas. We can use it to generate content, to analyze data. We can use it to help get deeper on audience personas. We can even use it to help with some predictive analytics.”

It’s something that sets TRIO apart from other agencies in the Charleston area, but it’s also something the company approaches with care, much like WIS-TV.

“I think the most important thing is that it’s a tool and not a format,” Dennis said. “We use that just like we use other tools that we’ve used for a long time, technology-wise, and we don’t let it overtake the need for the human brain.”

Dennis said AI has assisted with many of the agency’s campaigns and much of its audience targeting.

She said employees have used AI to help match clients’ voices in social media content, generate ideas for the agency’s own content and aid with research. But much like WIS-TV, TRIO always ensures human eyes are the last to see content before publication.

“We want to make sure that we’re still exercising that muscle, that we’re staying creative, and that we’re keeping our integrity and not doing a copy and paste situation,” Dennis said. “We’re really just allowing it to help us be more efficient and to help us see something maybe from another perspective, and then use the skills and talents that we already have to make that even better.”

As AI technology develops, many professionals expect its momentum to continue.

“I don’t know that there’s any industry that is fully ahead of artificial intelligence and what it’s capable of, and I’m including the technology companies into that,” Thomas said. “I think our regulations and laws are woefully behind, and you’re going to see us continue as a society to adapt to the new possibilities and potentially threats that artificial intelligence creates.”

And while professionals feel comfortable with where they stand in the space, the next generation of journalists appears more concerned about those potential threats.

AI in journalism: A step too giant?

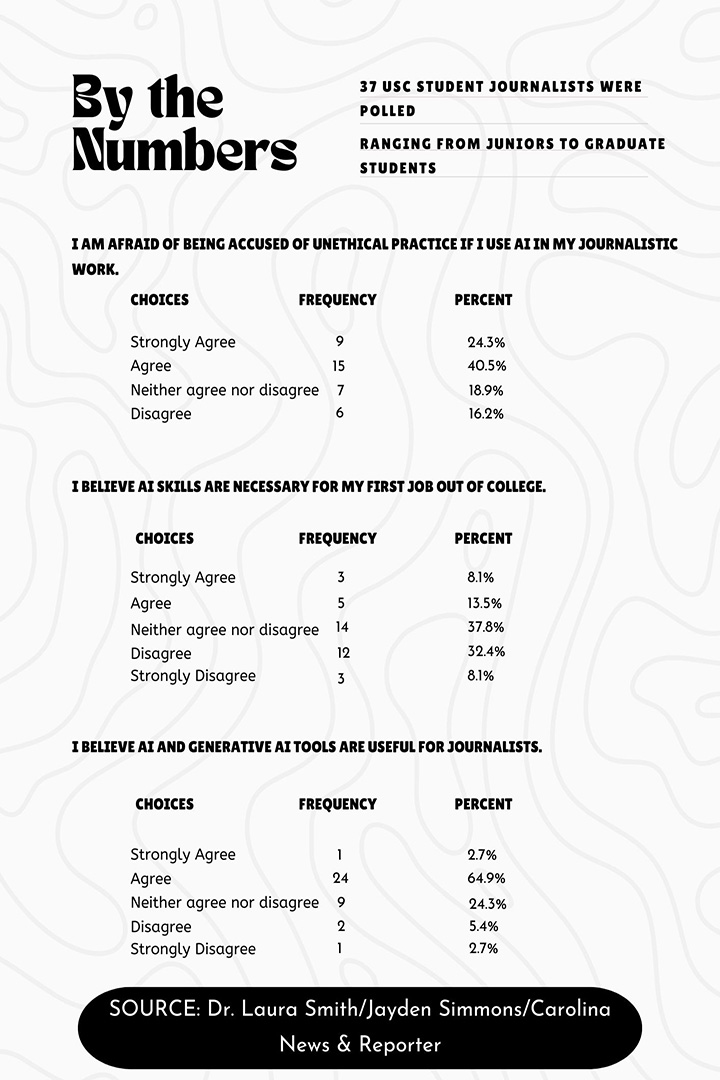

Dr. Laura Smith, a senior journalism instructor at the University of South Carolina, surveyed the journalism school’s in-house news team at the beginning of the semester.

The results? A lot of apprehension.

“They’re not absolutely against it,” Smith said. “But they’re worried about it being misused or not carefully used enough. And then also in that camp (are) people who are really worried about what it will mean to jobs. And that’s probably the biggest ‘nay’ camp.”

Smith said the survey found some students were worried that “as soon as we make (AI) good enough, people will lose their jobs.”

In a poll of 37 USC student-journalists ranging from juniors to graduate students, 64.8% said they were afraid of being accused of unethical practices if they were to use AI in journalistic work.

Before teaching, Smith was a producer at WFLA-TV in Tampa, Florida, from 1987 to 1991. While AI is far from the first cutting-edge technology to change TV stations, Smith said the shift to AI in the newsroom, combined with staff cuts, has added to students’ concerns about finding full-time jobs in the news industry.

“When I was producing news, it took 13 people to physically get my news product up on the air. That’s done with three people today,” Smith said. “If Adobe Premiere can edit your story for you, why do we need editors? If AI can write the story for you, why do we need writers?”

Smith’s survey also showed a general unease about using AI in the newsroom, driven by worries that it could erode the essential skills reporters need. Some 40.5% of surveyed student-journalists said they do not believe AI skills will be necessary for their first job out of college.

But Smith said some AI skills are already essential. “Using tools that help you do one or two of those tasks, especially helping people find your article through search engine optimization, is critical,” she said.

Smith described the conundrum student-journalists face.

“‘How am I ever going to learn to write a headline if I let AI write my headlines?’” Smith said the students asked. “‘If I let AI do this work for me, it’s a crutch, and it may hurt me in the long run instead of help me.’”

Still, some believe ignoring AI’s emergence would be a mistake.

“I think it’s smart to have a working knowledge of how to use it,” Thomas said. “I think that students, especially students in school right now, are out on the very cutting edge of this technology, and it’s going to affect their careers. So, the more you can learn about it now, the better.”

But where is that line of ethics drawn?

Smith said some things are clearly unethical, such as passing off work done entirely by AI as your own. But there are gray areas, such as using a voiceover generator built from your own voice for a news package.

“You don’t have to spend all that time in the booth recording and rerecording and doing something over and over again that you’re not interested in or (don’t) have the time for,” Smith said. “It makes things so much faster. If you can do it for yourself, how does your audience know that’s actually you? And what’s to stop your media organization from doing more of that with your voice?”

So … what’s next?

Coca-Cola recently faced backlash for its AI-generated Christmas ad campaign.

Much like the company’s previous holiday ads, the new ads are full of lights, snowy rooftops and, of course, Coca-Cola trucks lining highways to deliver Santa his drink of choice, but they feature no human faces or voices. Dennis and TRIO are watching how the ads are received.

“I think that some folks in the marketing industry would almost argue that they saved time and resources and were able to produce things for a fraction of the cost,” Dennis said. “But where’s the human element to that?”

Thomas said the lack of that human element in generative AI content could shift the communications industry altogether.

“That immediately threatens humans whose livelihood is built around being voiceover actors,” Thomas said. “I think that’s why you saw Hollywood’s writers and directors and actors go on strike last year. I think there’s ethical implications with that, that we should all take very seriously.”

Robby Thomas helped Gray Media craft its public policy on the use of AI in media content. (Photo provided by Robby Thomas/Carolina News & Reporter)

Services such as Gemini AI or ChatGPT can proofread stories, emulate writing styles or even suggest headlines. (Gemini AI photo captured by Jayden Simmons/Carolina News & Reporter)