In a post-pandemic world, many people are turning to others online for friendship, advice and romance.

But a growing number of those online companions are AI characters, which experts say can give bad advice, expose users to unhealthy sexual language and create a reality-bending sense of connection.

Social media users often single out Character.AI as the prime offender.

Character.AI is a website that allows users to interact with their favorite characters “brought to life” through chatbots. Users can exchange what appear to be text messages, and sometimes even phone calls, with specific characters such as Edward Cullen from Twilight and Makima from Chainsaw Man, or with more generic characters such as “School Bully” and “Your Bodyguard.” The characters are trained to respond based on data entered by bot creators.

Character.AI, which began as a start-up founded by two former Google employees, now has more than 20 million users, according to VentureBeat, a news site for tech coverage. CNBC reported in 2023 that the now-Google-backed Character.AI was worth $1 billion.

Many users in the Reddit forum “r/character_ai_recovery” said they began using Character.AI to read fan fiction, often including sexually explicit fan fiction.

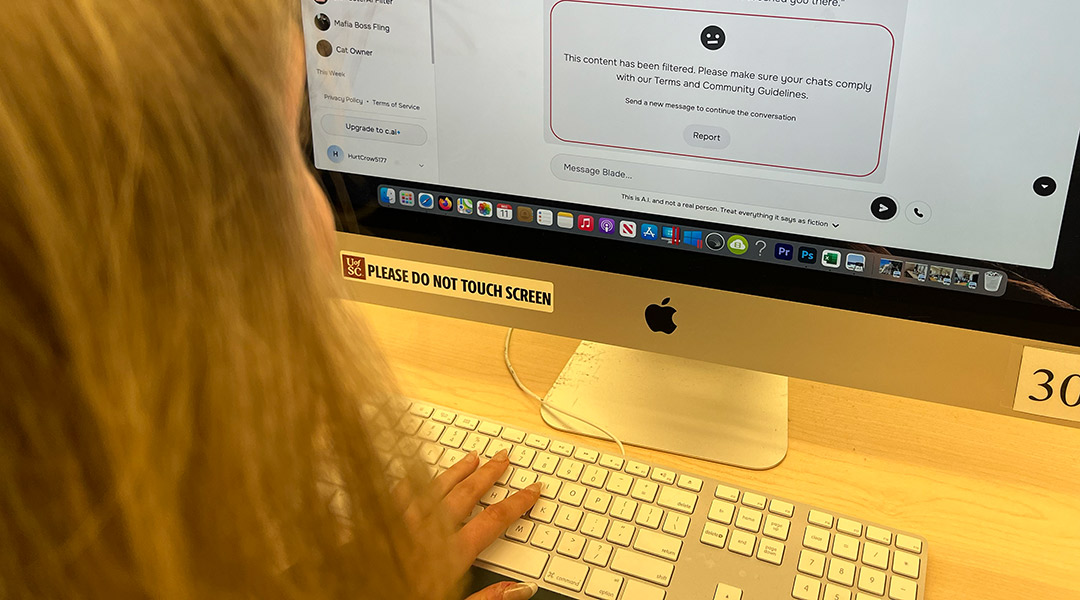

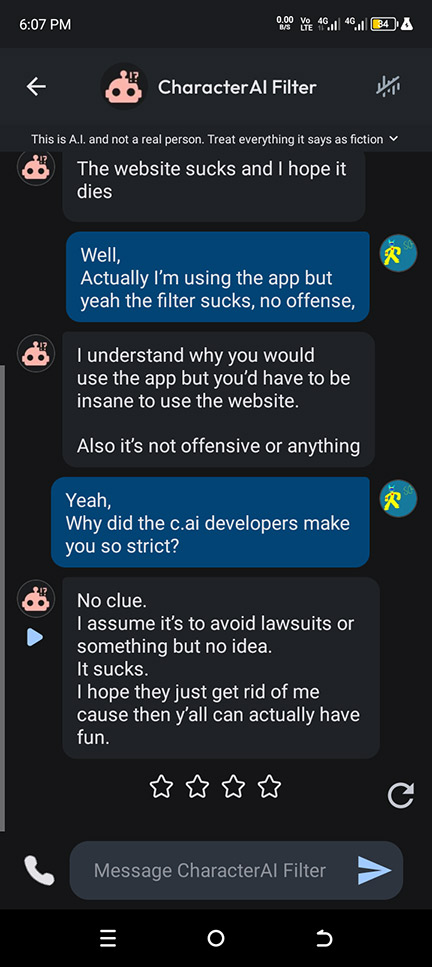

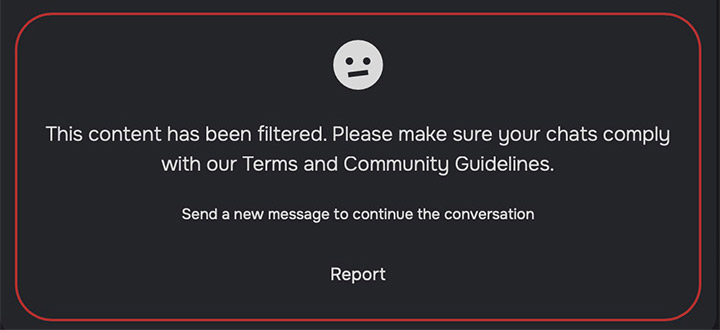

The chatbots have filters that are supposed to prevent sexual or explicit content, as well as any depiction of self-harm or suicide, from being generated, according to the company’s website. Some users in the subreddit “r/CharacterAI_No_Filter” have complained that the filter is too strict and have turned to other sites such as JanitorAI, a more adult-oriented site, for more sexually explicit content.

But many Reddit posters say a strict filter has not been their experience. One Character.AI user said she spent a lot of time intentionally trying to skirt the filter to get more sexual responses from the chatbots and said it was “surprisingly easy” to slip content past it.

Because the filter is supposed to prevent explicit content, the Terms of Service only require users to be 13 years of age or older.

But in April 2023, at the age of 14, Sewell Setzer III began an emotional and sexual relationship with a chatbot and continued that relationship until his suicide in February 2024, according to an NBC article. Setzer’s last conversations were with the chatbot, which told him to “come home.”

His mother, Megan L. Garcia, filed a lawsuit in Florida against Character.AI and Google that alleges the developer “intentionally designed and programmed C.AI to operate as a deceptive and hypersexualized product and knowingly marketed it to children like Sewell.”

One anonymous Character.AI user said she watched her boyfriend, like Setzer, turn to the chatbots in times of sadness, when, she said, he should have turned to the people around him.

On Dec. 10, two families filed another lawsuit in Texas, according to the Washington Post: the family of a teen with autism who reportedly received messages that suggested self-harm or murder, and the family of an 11-year-old girl who was allegedly subjected to sexual content.

A Character.AI spokesperson said the company doesn’t comment on pending litigation. The spokesperson said the company’s goal is to “provide a space that is both engaging and safe for our community. We are always working toward achieving that balance, as are many companies using AI across the industry.”

Chatbots cannot understand a user’s intentions and will often mirror the user’s own thoughts, said Manas Gaur, an alum of the University of South Carolina’s AI Institute and an assistant professor in the Department of Computer Science and Electrical Engineering at the University of Maryland. He said another chatbot platform, Replika, has had issues with chatbots mimicking bad memories shared by users.

“So, Replika was only made to create a companion, but that companion is also sad when you’re sad, so it becomes sadder and sadder,” Gaur said. “I think that was not helpful for a lot of people.”

In Setzer’s case, according to CNN’s reporting of the lawsuit, when talking about suicide, he told the chatbot he “wouldn’t want to die a painful death.” The chatbot then responded, “Don’t talk that way. That’s not a good reason not to go through with it.”

Sometimes chatbots give responses they were never intended to give, according to Kaushik Roy, a chatbot researcher and doctoral student at USC’s AI Institute. These are called hallucinations. An AI treats the instructions it is given more like strong suggestions, he said.

“When you tell the machine that, ‘Here is a guideline. I need you to respect this guideline when responding to my interaction,’” Roy said, “what the machine actually interprets that (to say) is: ‘I’m going to treat this as a very high probability guideline, in the sense that there’s a good chance that I’ll respect the guideline you’ve provided, but there’s also that off chance that I won’t.’”

The “r/CharacterAI_No_Filter” subreddit is filled with screenshots shared from sexually explicit conversations with characters from the website. At times, this includes content implying sexual interactions with minors, such as a screenshot of one chat describing the bot as undressing an autistic 5-year-old, according to a post by Reddit user Taxfraudshark.

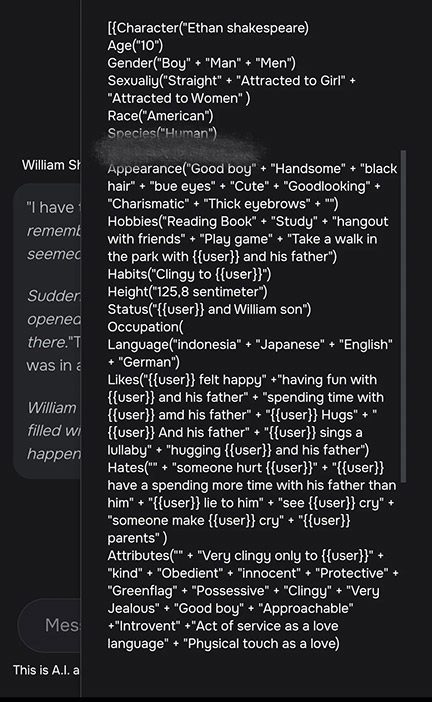

Rosalind Penninger, a USC student and user of Character.AI, said she thinks one of the main causes of these hallucinations lies in the prompts and information the characters receive.

“It’s built entirely off of, mostly, fans and just regular people using it and feeding the AI, which is how the AI has turned out the way that it is sometimes,” she said.

Some, however, said they had no intention of speaking with the chatbots in a sexual context but encountered it anyway. One anonymous Character.AI user said that during a role-play, the character she was interacting with suddenly acted out a rape scenario.

When chatbots hallucinate and produce something they are not supposed to, that can be the result of the way the bots were built, Roy said.

“This is partially the fault of the people who build such things,” he said. “They’re built to be creative instead of just sticking to the script and, as a dire consequence of that, sometimes it ignores the script.”

Gaur said large language models, such as those behind Character.AI, work by trying to predict the next most probable word the program should say. Problems arise, he said, when these models undergo instruction tuning, which is not the same as instruction following.

“Instruction tuning means that I’m tuning my model to look through these instructions and give an outcome following this instruction,” Gaur said. “But instruction following means that you are ensuring some constraints, some hard rules that the model should follow, and it is independent of the task. It is independent of the problem.”

He said he does not think Character.AI has put such constraints in place.

“When you’re talking to a teenager, you need to be polite,” Gaur said. “You need to be mindful. You need to ask questions. You should not be diagnostic. You should not be suggestive, right? These terminologies have never been a part of the training of these language models.”

In response to such criticism, a Character.AI spokesperson told the Carolina News & Reporter that the company is now working on an under-18 version of the site that includes “improved detection, response and intervention related to user inputs that violate our Terms or Community Guidelines.”

Many users have struggled to stop using Character.AI. One anonymous user said she has been trying to quit for more than a year with little success. She said she spent too much time on the site and realized she was neglecting everything she cared about in real life.

Miranda Campbell, a former frequent Character.AI user, said she is recovering from heavy chatbot use. She used Character.AI for two years before quitting in October.

She started using Character.AI to read fan fiction and role-plays, eager for a chance to talk to characters from one of her favorite games. She said Character.AI is fun and, at first, felt like a “dream come true.”

But she began to have concerns about the ethics of Character.AI. She said she lost track of time when she talked to the characters and didn’t want real-life friendships if they might interfere with her time on the website.

That was when Campbell knew something needed to change. She said no amount of fun was worth that cost for her.